1. Install the RPMs required for the Hadoop client:

at-3.1.13-22.el7.x86_64.rpm

avahi-libs-0.6.31-17.el7.x86_64.rpm

avro-libs-1.7.6+cdh5.13.0+135-1.cdh5.13.0.p0.34.el7.noarch.rpm

bc-1.06.95-13.el7.x86_64.rpm

bigtop-jsvc-0.6.0+cdh5.13.0+911-1.cdh5.13.0.p0.34.el7.x86_64.rpm

bigtop-tomcat-0.7.0+cdh5.13.0+0-1.cdh5.13.0.p0.34.el7.noarch.rpm

bigtop-utils-0.7.0+cdh5.13.0+0-1.cdh5.13.0.p0.34.el7.noarch.rpm

cups-client-1.6.3-29.el7.x86_64.rpm

cups-libs-1.6.3-29.el7.x86_64.rpm

ed-1.9-4.el7.x86_64.rpm

flume-ng-1.6.0+cdh5.13.0+169-1.cdh5.13.0.p0.34.el7.noarch.rpm

hadoop-0.20-mapreduce-2.6.0+cdh5.13.0+2639-1.cdh5.13.0.p0.34.el7.x86_64.rpm

hadoop-2.6.0+cdh5.13.0+2639-1.cdh5.13.0.p0.34.el7.x86_64.rpm

hadoop-client-2.6.0+cdh5.13.0+2639-1.cdh5.13.0.p0.34.el7.x86_64.rpm

hadoop-hdfs-2.6.0+cdh5.13.0+2639-1.cdh5.13.0.p0.34.el7.x86_64.rpm

hadoop-mapreduce-2.6.0+cdh5.13.0+2639-1.cdh5.13.0.p0.34.el7.x86_64.rpm

hadoop-yarn-2.6.0+cdh5.13.0+2639-1.cdh5.13.0.p0.34.el7.x86_64.rpm

hive-1.1.0+cdh5.13.0+1269-1.cdh5.13.0.p0.34.el7.noarch.rpm

hive-jdbc-1.1.0+cdh5.13.0+1269-1.cdh5.13.0.p0.34.el7.noarch.rpm

jdk-8u144-linux-x64.rpm

kite-1.0.0+cdh5.13.0+145-1.cdh5.13.0.p0.34.el7.noarch.rpm

krb5-workstation-1.15.1-8.el7.x86_64.rpm

libkadm5-1.15.1-8.el7.x86_64.rpm

m4-1.4.16-10.el7.x86_64.rpm

mailx-12.5-16.el7.x86_64.rpm

nmap-ncat-6.40-7.el7.x86_64.rpm

parquet-1.5.0+cdh5.13.0+191-1.cdh5.13.0.p0.34.el7.noarch.rpm

parquet-format-2.1.0+cdh5.13.0+18-1.cdh5.13.0.p0.34.el7.noarch.rpm

patch-2.7.1-8.el7.x86_64.rpm

psmisc-22.20-15.el7.x86_64.rpm

redhat-lsb-core-4.1-27.el7.centos.1.x86_64.rpm

redhat-lsb-submod-security-4.1-27.el7.centos.1.x86_64.rpm

sentry-1.5.1+cdh5.13.0+410-1.cdh5.13.0.p0.34.el7.noarch.rpm

solr-4.10.3+cdh5.13.0+519-1.cdh5.13.0.p0.34.el7.noarch.rpm

spark-core-1.6.0+cdh5.13.0+530-1.cdh5.13.0.p0.34.el7.noarch.rpm

spark-python-1.6.0+cdh5.13.0+530-1.cdh5.13.0.p0.34.el7.noarch.rpm

spax-1.5.2-13.el7.x86_64.rpm

time-1.7-45.el7.x86_64.rpm

unzip-6.0-16.el7.x86_64.rpm

wget-1.14-15.el7_4.1.x86_64.rpm

zip-3.0-11.el7.x86_64.rpm

zookeeper-3.4.5+cdh5.13.0+118-1.cdh5.13.0.p0.34.el7.x86_64.rpm

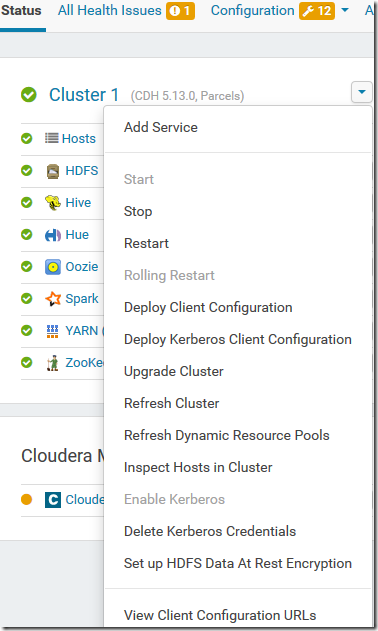
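The list above is long, so it is easy to miss a package. Below is a minimal Python 3.7+ sketch, not part of the original procedure, that compares the RPM file names against what `rpm -qa --queryformat '%{NAME}\n'` reports as installed. It assumes the usual `name-version-release.arch.rpm` naming. Files that do not follow that convention, such as `jdk-8u144-linux-x64.rpm`, are reported separately for a manual check. The function names are hypothetical.

```python
import subprocess

# Architectures seen in the list above, plus a few common ones (assumption).
KNOWN_ARCHES = {"x86_64", "noarch", "i686", "aarch64"}

def parse_rpm_filename(filename):
    """Split 'name-version-release.arch.rpm' into (name, version, release, arch).

    Returns None for files that do not follow that convention.
    """
    if not filename.endswith(".rpm"):
        return None
    stem = filename[: -len(".rpm")]
    if "." not in stem:
        return None
    nvr, arch = stem.rsplit(".", 1)
    if arch not in KNOWN_ARCHES:
        return None
    parts = nvr.rsplit("-", 2)          # the name itself may contain dashes
    if len(parts) != 3:
        return None
    name, version, release = parts
    return name, version, release, arch

def installed_package_names():
    """Set of installed package names, as reported by rpm."""
    out = subprocess.run(
        ["rpm", "-qa", "--queryformat", "%{NAME}\n"],
        check=True, capture_output=True, text=True,
    ).stdout
    return set(out.split())

def missing_packages(rpm_files, installed):
    """Return (missing package names, file names that could not be parsed)."""
    missing, unparsed = [], []
    for f in rpm_files:
        parsed = parse_rpm_filename(f)
        if parsed is None:
            unparsed.append(f)
        elif parsed[0] not in installed:
            missing.append(parsed[0])
    return missing, unparsed
```

On the client, you could call `missing_packages(os.listdir("."), installed_package_names())` from the download directory after installing the files, for example with `yum localinstall *.rpm`.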

2. Download the Hadoop client configuration from Cloudera Manager:

[root@localhost ~]# curl -o hive.zip http://cdh-vm.dbaglobe.com:7180/cmf/services/16/client-config

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 7762 100 7762 0 0 68854 0 --:--:-- --:--:-- --:--:-- 69303

[root@localhost ~]# unzip -l hive.zip

Archive: hive.zip

Length Date Time Name

--------- ---------- ----- ----

557 12-02-2017 02:39 hive-conf/hadoop-env.sh

310 12-02-2017 02:39 hive-conf/log4j.properties

3729 12-02-2017 02:39 hive-conf/yarn-site.xml

5764 12-02-2017 02:39 hive-conf/hive-site.xml

315 12-02-2017 02:39 hive-conf/ssl-client.xml

1594 12-02-2017 02:39 hive-conf/topology.py

0 12-02-2017 02:39 hive-conf/redaction-rules.json

1132 12-02-2017 02:39 hive-conf/hive-env.sh

2549 12-02-2017 02:39 hive-conf/hdfs-site.xml

5194 12-02-2017 02:39 hive-conf/mapred-site.xml

3863 12-02-2017 02:39 hive-conf/core-site.xml

204 12-02-2017 02:39 hive-conf/topology.map

--------- -------

25211 12 files

[root@localhost ~]# unzip hive.zip

[root@localhost ~]# cp hive-conf/* /etc/hadoop/conf/

[root@localhost ~]# chmod +x /etc/hadoop/conf/*.sh

[root@localhost ~]# chmod +x /etc/hadoop/conf/*.py
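If client machines are rebuilt often, the unzip, cp and chmod steps above can be scripted. The following is a minimal Python 3 sketch that assumes the archive has already been downloaded with curl as shown and, like this one, keeps its files under a `hive-conf/` directory. The function name `install_client_config` is hypothetical.

```python
import os
import stat
import zipfile

EXEC_SUFFIXES = (".sh", ".py")   # the files the chmod +x commands above target

def install_client_config(zip_path, conf_dir, prefix="hive-conf/"):
    """Copy the top-level files under `prefix` in the archive into conf_dir.

    Adds execute bits to *.sh and *.py files, like `chmod +x`.
    Returns the sorted list of installed file names.
    """
    os.makedirs(conf_dir, exist_ok=True)
    installed = []
    with zipfile.ZipFile(zip_path) as zf:
        for info in zf.infolist():
            if info.is_dir() or not info.filename.startswith(prefix):
                continue
            name = info.filename[len(prefix):]
            if not name or "/" in name:
                continue  # cp hive-conf/* (without -r) does not copy subdirectories
            target = os.path.join(conf_dir, name)
            with zf.open(info) as src, open(target, "wb") as dst:
                dst.write(src.read())
            if name.endswith(EXEC_SUFFIXES):
                mode = os.stat(target).st_mode
                os.chmod(target, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
            installed.append(name)
    return sorted(installed)
```

Run as root, `install_client_config("hive.zip", "/etc/hadoop/conf")` would cover the same files as the commands above.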

3. Set Up the Kerberos Client

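- Copy /etc/krb5.conf from the cluster to /etc/krb5.conf on the client.

- Copy the required keytab from the server, then obtain a ticket (for example with kinit) and verify it with klist: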

[donghua@localhost ~]$ klist

Ticket cache: FILE:/tmp/krb5cc_1000

Default principal: donghua@DBAGLOBE.COM

Valid starting Expires Service principal

12/02/2017 03:05:33 12/03/2017 03:05:33 krbtgt/DBAGLOBE.COM@DBAGLOBE.COM
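All of the tests below depend on a valid ticket. MIT `klist -s` prints nothing and exits with a non-zero status when the credential cache cannot be read or has expired, which makes it handy in scripts. For more detail, the klist output itself can be parsed. This is a minimal sketch: the date format `MM/DD/YYYY HH:MM:SS` is taken from the output above and is an assumption, because klist formats dates according to the locale.

```python
import re
import subprocess
from datetime import datetime

# Date format as it appears in the klist output above (locale-dependent).
_DATE = r"\d{2}/\d{2}/\d{4} \d{2}:\d{2}:\d{2}"
_TICKET = re.compile(rf"^({_DATE})\s+({_DATE})\s+(\S+)\s*$")
_FMT = "%m/%d/%Y %H:%M:%S"

def parse_klist(text):
    """Return (default_principal, [(valid_starting, expires, service_principal), ...])."""
    principal, tickets = None, []
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("Default principal:"):
            principal = line.split(":", 1)[1].strip()
            continue
        m = _TICKET.match(line)   # header and "renew until" lines do not match
        if m:
            start, end, service = m.groups()
            tickets.append((datetime.strptime(start, _FMT),
                            datetime.strptime(end, _FMT), service))
    return principal, tickets

def has_valid_ticket():
    """True if klist -s reports a readable, unexpired credential cache."""
    return subprocess.run(["klist", "-s"]).returncode == 0
```

For the output shown above, `parse_klist` returns the principal `donghua@DBAGLOBE.COM` and a single krbtgt ticket valid for 24 hours.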

4. Test HDFS Client

[donghua@localhost ~]$ hdfs dfs -ls /user/donghua

Found 6 items

drwx------ - donghua donghua 0 2017-12-01 21:00 /user/donghua/.Trash

drwxr-xr-x - donghua donghua 0 2017-12-01 23:40 /user/donghua/.sparkStaging

drwx------ - donghua donghua 0 2017-12-01 19:09 /user/donghua/.staging

-rw-r--r-- 1 donghua donghua 46837865 2017-12-01 10:17 /user/donghua/IOTDataDemo.csv

drwxr-xr-x - donghua donghua 0 2017-12-01 20:35 /user/donghua/speedByDay

drwxr-xr-x - donghua donghua 0 2017-12-01 07:03 /user/donghua/tmp

[donghua@localhost ~]$ hdfs dfs -rm -r /user/donghua/speedByDay

17/12/02 03:38:18 INFO fs.TrashPolicyDefault: Moved: 'hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/speedByDay' to trash at: hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.Trash/Current/user/donghua/speedByDay
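The rm above did not delete the directory. Because HDFS trash is enabled (fs.trash.interval greater than 0), it moved the directory under the user's `.Trash/Current` directory, keeping the full original path. `hdfs dfs -rm -r -skipTrash` deletes immediately instead. The sketch below parses `hdfs dfs -ls` lines and computes that trash location. It relies on the default text layout shown above, so for scripting, `hdfs dfs -test -e <path>` is usually the more robust existence check. The helper names are hypothetical.

```python
from collections import namedtuple

LsEntry = namedtuple("LsEntry", "perms replication owner group size date time path")

def parse_ls_line(line):
    """Parse one `hdfs dfs -ls` entry; returns None for the 'Found N items' header."""
    fields = line.split(None, 7)          # maxsplit keeps paths with spaces intact
    if len(fields) != 8 or fields[0].startswith("Found"):
        return None
    perms, repl, owner, group, size, date, time, path = fields
    # Directories show '-' instead of a replication factor.
    return LsEntry(perms, None if repl == "-" else int(repl), owner, group,
                   int(size), date, time, path)

def trash_location(home, path):
    """Trash path for `hdfs dfs -rm -r path`, following the layout in the log above."""
    if not path.startswith("/"):
        raise ValueError("expected an absolute HDFS path")
    return home.rstrip("/") + "/.Trash/Current" + path
```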

5. Test Hive with beeline

[donghua@localhost ~]$ beeline

which: no hbase in (/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/home/donghua/.local/bin:/home/donghua/bin)

Beeline version 1.1.0-cdh5.13.0 by Apache Hive

beeline> !connect jdbc:hive2://cdh-vm.dbaglobe.com:10000/default;principal=hive/cdh-vm.dbaglobe.com@DBAGLOBE.COM

scan complete in 1ms

Connecting to jdbc:hive2://cdh-vm.dbaglobe.com:10000/default;principal=hive/cdh-vm.dbaglobe.com@DBAGLOBE.COM

Connected to: Apache Hive (version 1.1.0-cdh5.13.0)

Driver: Hive JDBC (version 1.1.0-cdh5.13.0)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://cdh-vm.dbaglobe.com:10000/def> select count(*) from iotdatademo;

INFO : Compiling command(queryId=hive_20171202031414_9fe84dde-1b02-492d-a135-9949fb76aac8): select count(*) from iotdatademo

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:_c0, type:bigint, comment:null)], properties:null)

INFO : Completed compiling command(queryId=hive_20171202031414_9fe84dde-1b02-492d-a135-9949fb76aac8); Time taken: 0.123 seconds

INFO : Executing command(queryId=hive_20171202031414_9fe84dde-1b02-492d-a135-9949fb76aac8): select count(*) from iotdatademo

INFO : Query ID = hive_20171202031414_9fe84dde-1b02-492d-a135-9949fb76aac8

INFO : Total jobs = 1

INFO : Launching Job 1 out of 1

INFO : Starting task [Stage-1:MAPRED] in serial mode

INFO : Number of reduce tasks determined at compile time: 1

INFO : In order to change the average load for a reducer (in bytes):

INFO : set hive.exec.reducers.bytes.per.reducer=<number>

INFO : In order to limit the maximum number of reducers:

INFO : set hive.exec.reducers.max=<number>

INFO : In order to set a constant number of reducers:

INFO : set mapreduce.job.reduces=<number>

INFO : number of splits:1

INFO : Submitting tokens for job: job_1512169991692_0018

INFO : Kind: HDFS_DELEGATION_TOKEN, Service: 192.168.56.10:8020, Ident: (token for donghua: HDFS_DELEGATION_TOKEN owner=donghua, renewer=yarn, realUser=hive/cdh-vm.dbaglobe.com@DBAGLOBE.COM, issueDate=1512202491680, maxDate=1512807291680, sequenceNumber=82, masterKeyId=6)

INFO : Kind: HIVE_DELEGATION_TOKEN, Service: HiveServer2ImpersonationToken, Ident: 00 07 64 6f 6e 67 68 75 61 07 64 6f 6e 67 68 75 61 25 68 69 76 65 2f 63 64 68 2d 76 6d 2e 64 62 61 67 6c 6f 62 65 2e 63 6f 6d 40 44 42 41 47 4c 4f 42 45 2e 43 4f 4d 8a 01 60 16 4a 89 f0 8a 01 60 3a 57 0d f0 15 01

INFO : The url to track the job: http://cdh-vm.dbaglobe.com:8088/proxy/application_1512169991692_0018/

INFO : Starting Job = job_1512169991692_0018, Tracking URL = http://cdh-vm.dbaglobe.com:8088/proxy/application_1512169991692_0018/

INFO : Kill Command = /opt/cloudera/parcels/CDH-5.13.0-1.cdh5.13.0.p0.29/lib/hadoop/bin/hadoop job -kill job_1512169991692_0018

INFO : Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

INFO : 2017-12-02 03:15:01,414 Stage-1 map = 0%, reduce = 0%

INFO : 2017-12-02 03:15:06,670 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.87 sec

INFO : 2017-12-02 03:15:12,988 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.61 sec

INFO : MapReduce Total cumulative CPU time: 3 seconds 610 msec

INFO : Ended Job = job_1512169991692_0018

INFO : MapReduce Jobs Launched:

INFO : Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 3.61 sec HDFS Read: 46846159 HDFS Write: 7 SUCCESS

INFO : Total MapReduce CPU Time Spent: 3 seconds 610 msec

INFO : Completed executing command(queryId=hive_20171202031414_9fe84dde-1b02-492d-a135-9949fb76aac8); Time taken: 23.535 seconds

INFO : OK

+---------+--+

| _c0 |

+---------+--+

| 864010 |

+---------+--+

1 row selected (23.799 seconds)

0: jdbc:hive2://cdh-vm.dbaglobe.com:10000/def>
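On a Kerberized cluster, the `principal=` part of the JDBC URL names HiveServer2's service principal, not the connecting user. The user is identified by the ticket obtained in step 3. The same connection can be made non-interactively with `beeline -u <url> -e <query>`. Below is a small sketch that builds the URL and argument list. The function names and defaults are illustrative assumptions.

```python
def hive_jdbc_url(host, port=10000, database="default", principal=None):
    """Build a HiveServer2 JDBC URL of the form used with !connect above."""
    url = f"jdbc:hive2://{host}:{port}/{database}"
    if principal:
        # HiveServer2's Kerberos service principal, not the client user's.
        url += f";principal={principal}"
    return url

def beeline_command(url, query):
    """Argument list for a non-interactive beeline run: beeline -u URL -e QUERY."""
    return ["beeline", "-u", url, "-e", query]
```

Passing the result to `subprocess.run(...)` would reproduce the `select count(*)` test without an interactive session.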

6. Test PySpark

[donghua@localhost ~]$ pyspark

Python 2.7.5 (default, Aug 4 2017, 00:39:18)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-16)] on linux2

Type "help", "copyright", "credits" or "license" for more information.

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel).

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/lib/zookeeper/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/flume-ng/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/parquet/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/avro/avro-tools-1.7.6-cdh5.13.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

17/12/02 03:18:16 WARN util.Utils: Your hostname, localhost.localdomain resolves to a loopback address: 127.0.0.1; using 10.0.2.15 instead (on interface enp0s3)

17/12/02 03:18:16 WARN util.Utils: Set SPARK_LOCAL_IP if you need to bind to another address

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 1.6.0
      /_/

Using Python version 2.7.5 (default, Aug 4 2017 00:39:18)

SparkContext available as sc, HiveContext available as sqlContext.

>>> sc.textFile("/user/donghua/IOTDataDemo.csv") \

... .filter(lambda line: line[0:9] <> "StationID") \

... .map(lambda line: (line.split(",")[3],(float(line.split(",")[4]),1))) \

... .reduceByKey(lambda a,b: (a[0]+b[0],a[1]+b[1])) \

... .mapValues(lambda v: v[0]/v[1]) \

... .sortByKey() \

... .collect()

17/12/02 03:18:50 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[(u'0', 80.42217204861018), (u'1', 80.4242077305864), (u'2', 80.516892013888), (u'3', 80.42997673611163), (u'4', 80.62740798611237), (u'5', 80.49621712963015), (u'6', 80.54539832175986)]

>>>
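The PySpark pipeline computes a per-key mean without averaging averages. reduceByKey needs an associative, commutative function, so each value is carried as a (sum, count) pair that can be merged in any order, and the division happens only once in mapValues. Note that `<>` is valid only in Python 2, which this shell uses. Under Python 3, the filter would be written with `!=`. Here is a plain-Python sketch of the same logic for checking it on a small sample. Which columns hold the key (index 3) and the speed (index 4) is inferred from the session above, and their meaning is an assumption.

```python
from collections import defaultdict

def average_speed_by_key(lines, key_col=3, value_col=4, header_prefix="StationID"):
    """Plain-Python equivalent of the filter/map/reduceByKey/mapValues/sortByKey chain."""
    totals = defaultdict(lambda: [0.0, 0])   # key -> [running sum, count]
    for line in lines:
        if line.startswith(header_prefix):   # same as line[0:9] == "StationID"
            continue
        fields = line.split(",")             # split once per line
        acc = totals[fields[key_col]]
        acc[0] += float(fields[value_col])
        acc[1] += 1
    # mapValues(lambda v: v[0]/v[1]) followed by sortByKey()
    return sorted((k, s / n) for k, (s, n) in totals.items())
```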

7. Test spark-submit with a Spark application

[donghua@localhost ~]$ spark-submit --master yarn --deploy-mode cluster speedByDay.py /user/donghua/IOTDataDemo.csv

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/lib/zookeeper/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/flume-ng/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/parquet/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/avro/avro-tools-1.7.6-cdh5.13.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

17/12/02 08:18:06 INFO client.RMProxy: Connecting to ResourceManager at cdh-vm.dbaglobe.com/192.168.56.10:8032

17/12/02 08:18:06 INFO yarn.Client: Requesting a new application from cluster with 1 NodeManagers

17/12/02 08:18:06 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (1536 MB per container)

17/12/02 08:18:06 INFO yarn.Client: Will allocate AM container, with 1408 MB memory including 384 MB overhead

17/12/02 08:18:06 INFO yarn.Client: Setting up container launch context for our AM

17/12/02 08:18:06 INFO yarn.Client: Setting up the launch environment for our AM container

17/12/02 08:18:06 INFO yarn.Client: Preparing resources for our AM container

17/12/02 08:18:07 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/12/02 08:18:08 INFO yarn.YarnSparkHadoopUtil: getting token for namenode: hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0027

17/12/02 08:18:08 INFO hdfs.DFSClient: Created token for donghua: HDFS_DELEGATION_TOKEN owner=donghua@DBAGLOBE.COM, renewer=yarn, realUser=, issueDate=1512220688100, maxDate=1512825488100, sequenceNumber=95, masterKeyId=6 on 192.168.56.10:8020

17/12/02 08:18:10 INFO hive.metastore: Trying to connect to metastore with URI thrift://cdh-vm.dbaglobe.com:9083

17/12/02 08:18:10 INFO hive.metastore: Opened a connection to metastore, current connections: 1

17/12/02 08:18:10 INFO hive.metastore: Connected to metastore.

17/12/02 08:18:10 INFO hive.metastore: Closed a connection to metastore, current connections: 0

17/12/02 08:18:10 INFO yarn.YarnSparkHadoopUtil: HBase class not found java.lang.ClassNotFoundException: org.apache.hadoop.hbase.HBaseConfiguration

17/12/02 08:18:10 INFO yarn.Client: Uploading resource file:/usr/lib/spark/lib/spark-assembly-1.6.0-cdh5.13.0-hadoop2.6.0-cdh5.13.0.jar -> hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0027/spark-assembly-1.6.0-cdh5.13.0-hadoop2.6.0-cdh5.13.0.jar

17/12/02 08:18:12 INFO yarn.Client: Uploading resource file:/home/donghua/speedByDay.py -> hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0027/speedByDay.py

17/12/02 08:18:12 INFO yarn.Client: Uploading resource file:/usr/lib/spark/python/lib/pyspark.zip -> hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0027/pyspark.zip

17/12/02 08:18:12 INFO yarn.Client: Uploading resource file:/usr/lib/spark/python/lib/py4j-0.9-src.zip -> hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0027/py4j-0.9-src.zip

17/12/02 08:18:12 INFO yarn.Client: Uploading resource file:/tmp/spark-11697931-5b1c-459d-8e31-7beb8f7a9510/__spark_conf__515038920517948991.zip -> hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0027/__spark_conf__515038920517948991.zip

17/12/02 08:18:12 INFO spark.SecurityManager: Changing view acls to: donghua

17/12/02 08:18:12 INFO spark.SecurityManager: Changing modify acls to: donghua

17/12/02 08:18:12 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(donghua); users with modify permissions: Set(donghua)

17/12/02 08:18:13 INFO yarn.Client: Submitting application 27 to ResourceManager

17/12/02 08:18:13 INFO impl.YarnClientImpl: Submitted application application_1512169991692_0027

17/12/02 08:18:14 INFO yarn.Client: Application report for application_1512169991692_0027 (state: ACCEPTED)

17/12/02 08:18:14 INFO yarn.Client:

client token: Token { kind: YARN_CLIENT_TOKEN, service: }

diagnostics: N/A

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: root.users.donghua

start time: 1512220693034

final status: UNDEFINED

tracking URL: http://cdh-vm.dbaglobe.com:8088/proxy/application_1512169991692_0027/

user: donghua

17/12/02 08:18:15 INFO yarn.Client: Application report for application_1512169991692_0027 (state: ACCEPTED)

17/12/02 08:18:16 INFO yarn.Client: Application report for application_1512169991692_0027 (state: ACCEPTED)

17/12/02 08:18:17 INFO yarn.Client: Application report for application_1512169991692_0027 (state: ACCEPTED)

17/12/02 08:18:18 INFO yarn.Client: Application report for application_1512169991692_0027 (state: ACCEPTED)

17/12/02 08:18:19 INFO yarn.Client: Application report for application_1512169991692_0027 (state: ACCEPTED)

17/12/02 08:18:20 INFO yarn.Client: Application report for application_1512169991692_0027 (state: ACCEPTED)

17/12/02 08:18:21 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:21 INFO yarn.Client:

client token: Token { kind: YARN_CLIENT_TOKEN, service: }

diagnostics: N/A

ApplicationMaster host: 192.168.56.10

ApplicationMaster RPC port: 0

queue: root.users.donghua

start time: 1512220693034

final status: UNDEFINED

tracking URL: http://cdh-vm.dbaglobe.com:8088/proxy/application_1512169991692_0027/

user: donghua

17/12/02 08:18:22 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:23 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:24 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:25 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:26 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:27 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:28 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:29 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:30 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:31 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:32 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:33 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:34 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:35 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:36 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:37 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:38 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:39 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:40 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:41 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:42 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:43 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:44 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:45 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:46 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:47 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:48 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:49 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:50 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:51 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:52 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:53 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:54 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:55 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:56 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:57 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:58 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:18:59 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:19:00 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:19:01 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:19:02 INFO yarn.Client: Application report for application_1512169991692_0027 (state: RUNNING)

17/12/02 08:19:03 INFO yarn.Client: Application report for application_1512169991692_0027 (state: FINISHED)

17/12/02 08:19:03 INFO yarn.Client:

client token: Token { kind: YARN_CLIENT_TOKEN, service: }

diagnostics: N/A

ApplicationMaster host: 192.168.56.10

ApplicationMaster RPC port: 0

queue: root.users.donghua

start time: 1512220693034

final status: SUCCEEDED

tracking URL: http://cdh-vm.dbaglobe.com:8088/proxy/application_1512169991692_0027/

user: donghua

17/12/02 08:19:03 INFO util.ShutdownHookManager: Shutdown hook called

17/12/02 08:19:03 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-11697931-5b1c-459d-8e31-7beb8f7a9510

[donghua@localhost ~]$


[donghua@localhost ~]$ hdfs dfs -cat /user/donghua/speedByDay/part-*

(u'0', 80.42217204861151)

(u'1', 80.42420773058639)

(u'2', 80.516892013888)

(u'3', 80.42997673611161)

(u'4', 80.62740798611237)

(u'5', 80.49621712962933)

(u'6', 80.5453983217595)
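The cluster-mode output matches the interactive PySpark result only up to the last few digits, for example 80.42217204861151 versus 80.42217204861018 for key '0'. This is most likely because floating-point addition is not associative, so a different summation order across tasks gives slightly different sums. The sketch below reads the saveAsTextFile output (Python 2 tuple reprs) back into a dict and compares two runs with a relative tolerance. The 1e-9 tolerance is an arbitrary choice.

```python
import ast
import math

def parse_saved_pairs(text):
    """Parse lines like "(u'0', 80.42217204861151)" into {key: value}."""
    result = {}
    for line in text.splitlines():
        line = line.strip()
        if line:
            # Python 3.3+ accepts the u'' prefix, so literal_eval can read these.
            key, value = ast.literal_eval(line)
            result[key] = value
    return result

def results_agree(a, b, rel_tol=1e-9):
    """True if both results have the same keys and each value matches within rel_tol."""
    return a.keys() == b.keys() and all(
        math.isclose(a[k], b[k], rel_tol=rel_tol) for k in a
    )
```

Feeding it the `hdfs dfs -cat` output above and the list printed in step 6 should show that the two runs agree within that tolerance.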
