A user must have a valid Kerberos ticket to interact with a secure Hadoop cluster. Any Hadoop command (such as hadoop fs -ls) fails if your credentials cache does not hold a valid Kerberos ticket.

You can examine the Kerberos tickets currently in your credentials cache by running the klist command. You can obtain a ticket by running the kinit command and either specifying a keytab file containing credentials, or entering the password for your principal.
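The check-then-obtain flow described above can be scripted. The following is a minimal sketch, assuming the MIT Kerberos client tools, where klist -s prints nothing and reports through its exit status whether the cache holds a valid ticket. The function name ensure_ticket and its two arguments are hypothetical, introduced only for illustration.

```shell
#!/bin/sh
# ensure_ticket KEYTAB PRINCIPAL
# Hypothetical helper: obtain a ticket from a keytab only when the
# credentials cache does not already hold a valid one.
ensure_ticket() {
    keytab=$1
    principal=$2
    # klist -s is silent; exit status 0 means a valid ticket is cached.
    if klist -s; then
        echo "valid ticket already in cache"
    else
        # kinit -k -t reads the key from the keytab instead of prompting
        # for the principal's password.
        kinit -k -t "$keytab" "$principal" &&
            echo "ticket obtained for $principal"
    fi
}
```

It could be called as ensure_ticket /path/to/hdfs.keytab hdfs/cdh-vm.dbaglobe.com@DBAGLOBE.COM before running any hdfs command in a script.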

Example: the hdfs superuser can no longer run HDFS commands after Kerberos security is enabled, until it obtains a ticket from the hdfs keytab

[hdfs@cdh-vm ~]$ kdestroy

[hdfs@cdh-vm ~]$ klist

klist: No credentials cache found (filename: /tmp/krb5cc_994)

[hdfs@cdh-vm ~]$ hdfs dfs -mkdir /user/donghua/hdfs

17/12/01 06:25:16 WARN security.UserGroupInformation: PriviledgedActionException as:hdfs (auth:KERBEROS) cause:javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]

17/12/01 06:25:16 WARN ipc.Client: Exception encountered while connecting to the server : javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]

17/12/01 06:25:16 WARN security.UserGroupInformation: PriviledgedActionException as:hdfs (auth:KERBEROS) cause:java.io.IOException: javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]

mkdir: Failed on local exception: java.io.IOException: javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]; Host Details : local host is: "cdh-vm.dbaglobe.com/192.168.56.10"; destination host is: "cdh-vm.dbaglobe.com":8020;

[root@cdh-vm ~]# find /var/run/cloudera-scm-agent/process/ -name hdfs.keytab

/var/run/cloudera-scm-agent/process/65-hdfs-SECONDARYNAMENODE/hdfs.keytab

/var/run/cloudera-scm-agent/process/64-hdfs-NAMENODE/hdfs.keytab

/var/run/cloudera-scm-agent/process/63-hdfs-DATANODE/hdfs.keytab
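Because the find command above returns one keytab per role directory, a script needs some rule for choosing among them. The sketch below picks the keytab in the highest-numbered directory, on the assumption that the numeric prefix grows with each role start so the largest number is the most recent; that numbering behaviour is an assumption, and newest_keytab is a hypothetical helper name.

```shell
#!/bin/sh
# newest_keytab PROCESS_DIR
# Hypothetical helper: print the hdfs.keytab whose parent directory
# has the largest numeric prefix (e.g. 65-hdfs-SECONDARYNAMENODE).
newest_keytab() {
    process_dir=$1
    find "$process_dir" -name hdfs.keytab 2>/dev/null |
        awk -F/ '
            {
                # $(NF-1) is the parent directory name; adding 0 keeps
                # only its leading number, so 165 sorts above 64.
                n = $(NF-1) + 0
                if (n >= max) { max = n; best = $0 }
            }
            END { if (best != "") print best }'
}
```

For the listing above, newest_keytab /var/run/cloudera-scm-agent/process would print the path under 65-hdfs-SECONDARYNAMENODE. Any of the listed keytabs may work, as the session below shows by using the DATANODE one.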

[hdfs@cdh-vm ~]$ klist -e -k -t /var/run/cloudera-scm-agent/process/63-hdfs-DATANODE/hdfs.keytab

Keytab name: FILE:/var/run/cloudera-scm-agent/process/63-hdfs-DATANODE/hdfs.keytab

KVNO Timestamp Principal

---- ------------------- ------------------------------------------------------

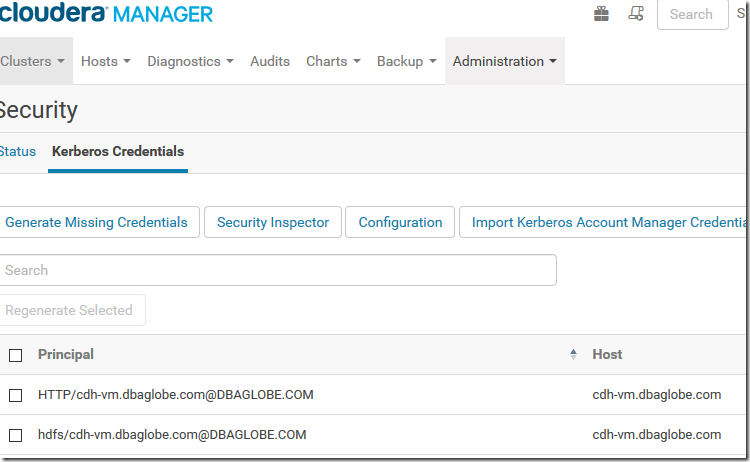

2 12/01/2017 05:38:03 HTTP/cdh-vm.dbaglobe.com@DBAGLOBE.COM (aes256-cts-hmac-sha1-96)

2 12/01/2017 05:38:03 HTTP/cdh-vm.dbaglobe.com@DBAGLOBE.COM (aes128-cts-hmac-sha1-96)

2 12/01/2017 05:38:03 hdfs/cdh-vm.dbaglobe.com@DBAGLOBE.COM (aes256-cts-hmac-sha1-96)

2 12/01/2017 05:38:03 hdfs/cdh-vm.dbaglobe.com@DBAGLOBE.COM (aes128-cts-hmac-sha1-96)
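The keytab listing above repeats each principal once per encryption type. A minimal sketch for reducing it to the distinct principal names, assuming the klist -k -t row layout shown above (KVNO, date, time, principal); keytab_principals is a hypothetical helper name.

```shell
#!/bin/sh
# keytab_principals KEYTAB
# Hypothetical helper: print each distinct principal stored in a keytab.
keytab_principals() {
    # Data rows start with a numeric KVNO; the header and separator rows
    # do not, so they are skipped. The principal is the fourth field.
    klist -k -t "$1" |
        awk '$1 ~ /^[0-9]+$/ { print $4 }' |
        LC_ALL=C sort -u
}
```

For the keytab above this would print the HTTP/cdh-vm.dbaglobe.com@DBAGLOBE.COM and hdfs/cdh-vm.dbaglobe.com@DBAGLOBE.COM principals; the name passed to kinit has to be one of those.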

[hdfs@cdh-vm ~]$ kinit -k -t /var/run/cloudera-scm-agent/process/63-hdfs-DATANODE/hdfs.keytab hdfs/cdh-vm.dbaglobe.com@DBAGLOBE.COM

keytab specified, forcing -k

[hdfs@cdh-vm ~]$ klist

Ticket cache: FILE:/tmp/krb5cc_994

Default principal: hdfs/cdh-vm.dbaglobe.com@DBAGLOBE.COM

Valid starting Expires Service principal

12/01/2017 06:28:17 12/02/2017 06:28:17 krbtgt/DBAGLOBE.COM@DBAGLOBE.COM

[hdfs@cdh-vm ~]$ hdfs dfs -mkdir /user/donghua/hdfs

[hdfs@cdh-vm ~]$ hdfs dfs -ls /user/donghua/

Found 2 items

-rw-r--r-- 1 donghua donghua 46837865 2017-12-01 06:07 /user/donghua/IOTDataDemo.csv

drwxr-xr-x - hdfs donghua 0 2017-12-01 06:28 /user/donghua/hdfs
